DevSecOps: Ensuring security throughout the pipeline

Intro

At Kainos, DevOps methodologies are at the core of how we work: every project we deliver and every product we build is structured around best-of-breed DevOps practices from the outset.

Another key tenet we integrate tightly with our DevOps practices is the set of 'Security by Design' principles. These principles treat security as intrinsic to the very core of the early solution design and build process. Security is not relegated to the outer layers of the solution and left as a to-do, to be developed sometime between MVP functional readiness and the launch of the service.

As we're all too aware, security is never a one-time tick-box exercise you simply complete and forget about. As time progresses, new and unexpected security issues will continually come to light from a multitude of sources, some more surprising than others (Log4Shell, the vulnerability in the widely used Log4j library, being one example). Once a security breach has occurred, there is no turning back the clock: the data is out there, and recovering from the reputational damage alone can be next to impossible for the organisations involved.

This blog describes approaches for integrating security tooling into your DevOps practices and CI/CD pipelines. We believe that, when correctly implemented alongside Security by Design application design and development practices, these additional layers help protect the data, assets and reputation of our clients and our organisation as a whole from early in the development process.

Build Time Validation

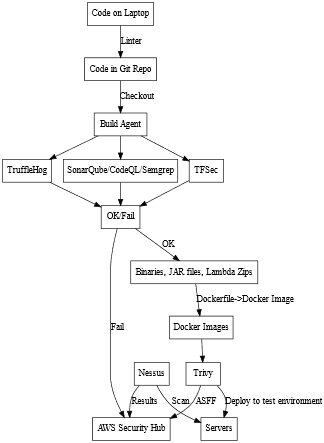

If we think about the stages between “code on a developer’s laptop” and “code in production,” it becomes clear that there are several points along the pipeline where we can add tools to enhance security.

Using the pipeline in the image above as an example, I have added a number of tools along its path, each of which enhances overall security in a specific way. Running a linter in a pre-commit hook, or as a post-push step in the Git repository, is general good practice rather than a security measure as such, but messy code is a good place for security issues to hide.
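As a minimal sketch of the pre-commit idea, the Python script below lints only the files staged for the current commit. It assumes a Python codebase linted with `flake8`; `LINT_COMMAND` and `LINT_EXTENSIONS` are placeholders to swap for your own stack. Git aborts a commit when the pre-commit hook exits with a non-zero status.

```python
#!/usr/bin/env python3
"""Sketch of a pre-commit hook that lints only the staged files."""
import subprocess
import sys

# Assumption: a Python project linted with flake8; replace to suit your stack.
LINT_COMMAND = ["flake8"]
LINT_EXTENSIONS = (".py",)


def staged_files() -> list[str]:
    """Return paths that are Added, Copied or Modified in the index."""
    out = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [line for line in out.splitlines() if line]


def files_to_lint(paths: list[str], extensions: tuple[str, ...] = LINT_EXTENSIONS) -> list[str]:
    """Keep only the paths the linter understands."""
    return [p for p in paths if p.endswith(extensions)]


def main() -> int:
    """Run the linter on staged files; a non-zero return blocks the commit."""
    targets = files_to_lint(staged_files())
    if not targets:
        return 0  # nothing relevant is staged, so allow the commit
    result = subprocess.run(LINT_COMMAND + targets)
    if result.returncode != 0:
        print("Lint errors found; commit aborted.", file=sys.stderr)
    return result.returncode
```

To use it as the hook itself, save it as `.git/hooks/pre-commit`, append `sys.exit(main())`, and make the file executable. Linting only staged files keeps the hook fast enough that developers are less tempted to bypass it.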

TruffleHog searches for secrets and sensitive text within Git commit history. It can also identify high-entropy text, which might indicate a key, token or password hash buried in the code. A significant number of data breaches have occurred because secrets were inadvertently committed to public repositories.
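To illustrate what "high-entropy text" means, here is a minimal Python sketch of the general technique: compute the Shannon entropy of each long token in a line and flag tokens above a threshold. It is not TruffleHog's implementation; the token pattern, the 20-character minimum and the 4.5-bit threshold are illustrative assumptions.

```python
import math
import re
from collections import Counter

# Illustrative assumptions, not TruffleHog's actual configuration.
TOKEN_PATTERN = re.compile(r"[A-Za-z0-9+/=_\-]{20,}")
ENTROPY_THRESHOLD = 4.5  # bits per character


def shannon_entropy(text: str) -> float:
    """Shannon entropy of the character distribution in `text`, in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    length = len(text)
    return -sum((n / length) * math.log2(n / length) for n in counts.values())


def high_entropy_tokens(line: str, threshold: float = ENTROPY_THRESHOLD) -> list[str]:
    """Return tokens in `line` that look random enough to be a secret."""
    return [tok for tok in TOKEN_PATTERN.findall(line) if shannon_entropy(tok) > threshold]
```

Note that a token of n characters can have at most log2(n) bits of entropy per character, so the minimum token length and the threshold have to be tuned together; with these values, only tokens containing more than 22 distinct characters can be flagged.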

SonarQube, CodeQL and Semgrep are three tools that perform Static Application Security Testing (SAST). In many of the projects I have worked on, SonarQube has been the tool of choice, but a brief straw poll on Twitter this week suggests that CodeQL and Semgrep are also popular.
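Real SAST tools build far richer models of the code (data flow, taint tracking, large rule libraries), but the core idea of inspecting source without running it can be sketched with Python's standard `ast` module. This toy check is an illustration only, not how SonarQube, CodeQL or Semgrep work internally: it flags direct calls to `eval` and `exec`, a classic example of a risky pattern.

```python
import ast

# Illustrative rule set: builtins that enable code injection when given untrusted input.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source: str, filename: str = "<string>") -> list[tuple[int, str]]:
    """Return (line number, function name) for each direct call to a risky builtin."""
    tree = ast.parse(source, filename=filename)  # parses only; nothing is executed
    findings = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)
```

A production rule would also need to handle aliases (for example `import builtins; builtins.eval(...)`), which is exactly the kind of depth the dedicated tools provide.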

Infrastructure as Code (IaC) analysis is a new feature in SonarQube, supported only in the most recent version. That is why the pipeline above also includes tfsec, a Terraform static analyser that can output its findings as JUnit files, which makes integrating them into reporting trivial. The pre-build tools run in parallel; however, all three must complete successfully before the build can progress.
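The parallel-then-gate behaviour can be sketched in Python with `concurrent.futures`: each pre-build check runs as a subprocess, and the build proceeds only if every check exits with status 0. The commands are deliberately left as parameters, because the exact invocations for TruffleHog, SonarQube and tfsec depend on your versions and configuration.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def run_check(name: str, command: list[str]) -> tuple[str, int]:
    """Run one check and return its name together with the process exit code."""
    completed = subprocess.run(command)
    return name, completed.returncode


def run_prebuild_checks(checks: dict[str, list[str]]) -> bool:
    """Run all checks concurrently; return True only if every check passed."""
    with ThreadPoolExecutor(max_workers=len(checks) or 1) as pool:
        futures = [pool.submit(run_check, name, cmd) for name, cmd in checks.items()]
        results = [f.result() for f in futures]  # blocks until every check finishes
    failed = [name for name, code in results if code != 0]
    for name in failed:
        print(f"Pre-build check failed: {name}", file=sys.stderr)
    return not failed
```

In a pipeline step, `sys.exit(0 if run_prebuild_checks(checks) else 1)` turns the result into the exit status the CI system understands, where `checks` is a hypothetical mapping from a label such as `"secrets"` to your own command line. Waiting for all checks, rather than stopping at the first failure, means a single run reports every problem at once.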

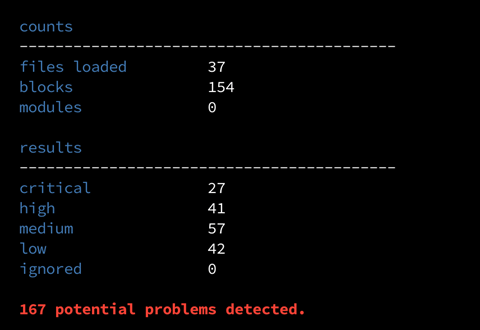

The image shows the JUnit report from tfsec for a ‘vulnerable-by-design’ application I have been testing this pipeline with.

In this case, failed tests are exactly what I want to see! tfsec also provides informational links explaining how to remediate each issue.
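Because the findings arrive as JUnit XML, a pipeline step can also read the file directly, for example to print a summary or apply its own gate. This is a minimal sketch using Python's standard `xml.etree.ElementTree`. It assumes the common JUnit layout, in which each `<testcase>` carries a child `<failure>` or `<error>` element when it fails. It has not been checked against every tfsec version's output.

```python
import xml.etree.ElementTree as ET


def junit_failures(xml_text: str) -> list[str]:
    """Return the names of test cases that contain a <failure> or <error> element."""
    root = ET.fromstring(xml_text)
    failed = []
    # iter() searches recursively, so this works whether the root element
    # is <testsuites> or a single <testsuite>.
    for case in root.iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            failed.append(case.get("name", "<unnamed>"))
    return failed
```

Failing the step whenever `junit_failures(...)` returns a non-empty list reproduces the gate outside the CI tool's own test reporting.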

tfsec also supports exporting results in SARIF format for ingestion into GitHub Security, should that be a requirement for your project.
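SARIF is a JSON-based OASIS standard, so the same file that GitHub ingests can also be summarised inside the pipeline. This sketch assumes the SARIF 2.1.0 layout: a top-level `runs` array, each run holding a `results` array whose entries may carry a `level`. As a simplification, a missing `level` is counted as `warning`, although SARIF can also derive the level from rule configuration.

```python
import json
from collections import Counter


def sarif_level_counts(sarif_text: str) -> Counter:
    """Count SARIF results by level across all runs in a SARIF log."""
    log = json.loads(sarif_text)
    counts: Counter = Counter()
    for run in log.get("runs", []):
        for result in run.get("results", []):
            # Simplification: treat a missing level as "warning".
            counts[result.get("level", "warning")] += 1
    return counts
```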

Docker Image Scanning

Once the tools above have passed and the Docker image has been built, but before it is pushed to a repository, we can scan it for vulnerabilities introduced by the base image (if not building from scratch). Amazon ECR (after the image has been pushed) and Amazon Inspector also offer this functionality, but scanning before the push saves both time and storage.
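The "scan before push" ordering can be expressed as a small gate: build, scan the local image, and push only if the scan passes. This sketch relies on Trivy's `--exit-code` and `--severity` options, so the scan step fails on HIGH or CRITICAL findings. The image tag passed in is a placeholder. The command runner is injectable so that the ordering can be exercised without Docker.

```python
import subprocess
from typing import Callable

Runner = Callable[[list[str]], int]


def run(command: list[str]) -> int:
    """Default runner: execute the command and return its exit code."""
    return subprocess.run(command).returncode


def build_scan_push(image: str, runner: Runner = run) -> bool:
    """Build the image, scan it with Trivy, and push it only if the scan passes."""
    steps = [
        ["docker", "build", "-t", image, "."],
        # Exit code 1 if any HIGH or CRITICAL vulnerability is found.
        ["trivy", "image", "--exit-code", "1", "--severity", "HIGH,CRITICAL", image],
        ["docker", "push", image],
    ]
    for step in steps:
        if runner(step) != 0:
            print(f"Step failed: {' '.join(step)}")
            return False  # later steps, including the push, never run
    return True
```

Which severities should block the push is a policy decision; starting with CRITICAL only and tightening over time is a common way to avoid an immediately red pipeline.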

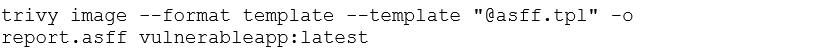

Enter Trivy (https://github.com/aquasecurity/trivy), another open-source utility. Trivy is highly customisable in terms of reporting and output formats. In my demo pipeline, it uses one of the provided templates to generate a report in ASFF (AWS Security Finding Format) that can be uploaded to AWS Security Hub.

The report is then uploaded to Security Hub directly from the build agent.
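Here is a minimal sketch of the upload step in Python. It assumes the report was generated with Trivy's ASFF template. Trivy's documentation shows a command such as `trivy image --format template --template "@contrib/asff.tpl" -o report.asff <image>`, and the template path depends on how Trivy was installed. The sketch also assumes the report holds a `Findings` list. Security Hub's `BatchImportFindings` API accepts at most 100 findings per request, so the findings are sent in chunks. The client is passed in, so the same function works with `boto3.client("securityhub")` or with a stub.

```python
import json
from typing import Any, Iterator

MAX_FINDINGS_PER_CALL = 100  # BatchImportFindings limit per request


def load_asff_findings(report_text: str) -> list[dict[str, Any]]:
    """Accept either {"Findings": [...]} or a bare list of ASFF findings."""
    data = json.loads(report_text)
    return data["Findings"] if isinstance(data, dict) else data


def chunks(items: list[Any], size: int) -> Iterator[list[Any]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def upload_findings(client: Any, findings: list[dict[str, Any]]) -> int:
    """Send findings in batches; return how many Security Hub reported as failed."""
    failed = 0
    for batch in chunks(findings, MAX_FINDINGS_PER_CALL):
        response = client.batch_import_findings(Findings=batch)
        failed += response.get("FailedCount", 0)
    return failed

# Usage on the build agent (requires boto3 and AWS credentials):
#   import boto3
#   with open("report.asff") as f:
#       findings = load_asff_findings(f.read())
#   failed = upload_findings(boto3.client("securityhub"), findings)
```

Returning the failed count, rather than ignoring the response, lets the pipeline surface rejected findings instead of silently losing them.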


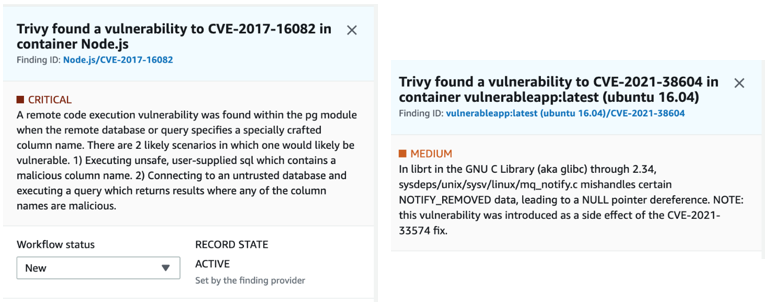

The findings are then available for search and reporting in Security Hub, as shown in the image.

On the left, Trivy has detected a vulnerability in the application caused by an outdated version of Node.js. On the right is a glibc vulnerability, caused by choosing an obsolete version of Ubuntu as the base image in the Dockerfile.

Deployment and Vulnerability Scanning

The last stage of the pipeline deploys the application stack to a disposable, isolated environment: ideally a separate AWS account or, at the very least, a separate VPC (Virtual Private Cloud). From there, we run a holistic vulnerability scan across the entire application using Amazon Inspector, which can automatically scan the EC2 instances within an account for vulnerabilities. A frequently used alternative is Nessus, and there are a multitude of open-source options too; I often use OpenVAS on personal projects for this very purpose.
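Once Inspector has had time to scan the disposable environment, a pipeline step could query its findings and fail if anything severe remains. This sketch assumes Amazon Inspector (v2) accessed through boto3's `inspector2` client. It assumes that `list_findings` returns a `findings` list plus a `nextToken` for pagination, and that each finding carries `status` and `severity` fields. The set of blocking severities is an illustrative policy choice. Inspector findings are account-wide, which is another reason to give the disposable environment its own AWS account.

```python
from collections import Counter
from typing import Any

BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}  # illustrative policy


def active_severity_counts(client: Any) -> Counter:
    """Page through Inspector findings and count ACTIVE ones by severity."""
    counts: Counter = Counter()
    kwargs: dict[str, Any] = {"maxResults": 100}
    while True:
        page = client.list_findings(**kwargs)
        for finding in page.get("findings", []):
            if finding.get("status") == "ACTIVE":  # ignore suppressed/closed findings
                counts[finding.get("severity", "UNTRIAGED")] += 1
        token = page.get("nextToken")
        if not token:
            return counts
        kwargs["nextToken"] = token


def scan_passes(counts: Counter) -> bool:
    """True when no active finding has a blocking severity."""
    return not any(counts[s] for s in BLOCKING_SEVERITIES)

# Usage (requires boto3 and AWS credentials for the disposable account):
#   counts = active_severity_counts(boto3.client("inspector2"))
```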

On one recent project, I took the post-deployment scanning stage a step further and wrote a wrapper around OWASP ZAP (Zed Attack Proxy) that ran in AWS Fargate. An HTTP POST request containing the URL of the newly deployed service would return a report generated by ZAP, which was then included in the artifacts for the pipeline run. The final stage of the build pipeline was a bash script that used cURL to trigger the ZAP scan.
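The original trigger was a bash script calling cURL. For consistency with the other examples, here is the same idea sketched in Python with the standard library's `urllib`. The wrapper's endpoint (`SCAN_ENDPOINT`), the JSON body shape `{"url": ...}` and the report file name are all hypothetical, because the wrapper's API isn't described here; adjust them to match your own service. ZAP scans can take many minutes, hence the generous timeout.

```python
import json
import urllib.request

# Hypothetical values: the wrapper's endpoint and payload format are assumptions.
SCAN_ENDPOINT = "https://zap-wrapper.example.internal/scan"
REPORT_PATH = "zap-report.html"


def build_scan_request(endpoint: str, target_url: str) -> urllib.request.Request:
    """Build a POST request asking the ZAP wrapper to scan `target_url`."""
    body = json.dumps({"url": target_url}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def trigger_scan(target_url: str, endpoint: str = SCAN_ENDPOINT,
                 report_path: str = REPORT_PATH, timeout: float = 1800) -> str:
    """Send the request and save the returned report as a pipeline artifact."""
    request = build_scan_request(endpoint, target_url)
    # urlopen raises HTTPError on a non-2xx response, which fails the pipeline step.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        report = response.read()
    with open(report_path, "wb") as f:
        f.write(report)
    return report_path
```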

Pipeline Infrastructure Security

My demo pipeline for this article runs on a hosted agent within our AWS account, which raises another point for discussion: the security of the hosted agent and its tooling.

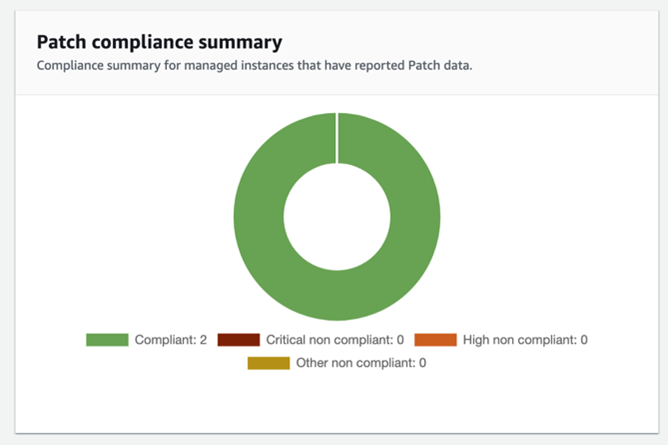

I have configured Patch Manager, a capability of AWS Systems Manager, to run during maintenance windows. For a build/deploy pipeline, the window can be a period when nobody is working, for example from 01:00 to 04:00.

The EC2 instances are then added to a patch group and assigned a patch baseline for the relevant OS. My instances in this demo all run Ubuntu, so they have AWS-UbuntuDefaultPatchBaseline applied.
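The same set-up can be captured in code. This sketch only builds the keyword arguments for boto3's Systems Manager `create_maintenance_window` call, turning "01:00 to 04:00" into a cron schedule, a duration and a cutoff. It also builds the tag list that places an instance in a patch group. The window name, the group name and the one-hour cutoff are assumptions. Schedules are evaluated in UTC unless `ScheduleTimezone` is also set.

```python
from typing import Any


def maintenance_window_params(name: str, start_hour: int, end_hour: int,
                              cutoff_hours: int = 1) -> dict[str, Any]:
    """Build kwargs for ssm.create_maintenance_window for a daily window."""
    duration = (end_hour - start_hour) % 24  # handles windows that cross midnight
    if duration == 0:
        raise ValueError("the window must last at least one hour")
    if not 0 <= cutoff_hours < duration:
        raise ValueError("the cutoff must be shorter than the window")
    return {
        "Name": name,
        # Six-field AWS cron: minutes hours day-of-month month day-of-week year.
        "Schedule": f"cron(0 {start_hour} ? * * *)",
        "Duration": duration,        # hours the window stays open
        "Cutoff": cutoff_hours,      # stop starting new tasks this long before the end
        "AllowUnassociatedTargets": False,
    }


def patch_group_tag(group: str) -> list[dict[str, str]]:
    """Tag list for ec2.create_tags that places an instance in a patch group."""
    return [{"Key": "Patch Group", "Value": group}]

# Usage (requires boto3 and AWS credentials):
#   boto3.client("ssm").create_maintenance_window(
#       **maintenance_window_params("build-agents-nightly", 1, 4))
#   boto3.client("ec2").create_tags(Resources=[instance_id],
#                                   Tags=patch_group_tag("build-agents"))
```

Registering the instances as window targets, and adding a task that runs the `AWS-RunPatchBaseline` document, are further steps not shown here.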

The significant benefit is that these instances receive security patches as part of continuous preventative maintenance, at a time when the system is not in use. As well as keeping the instances up to date automatically, this removes another manual task from administrators, lowering the total cost of maintenance.

Conclusion

There is a multitude of tools and utilities that can enhance security visibility across each step of your pipeline builds. Some are provided by AWS, some are third-party COTS (commercial off-the-shelf) products, and many are open source. Incorporating these tools into DevOps practices and integrating them into automation pipelines can give teams in-depth insight into the wider security landscape their code exists within.

These tools can reduce non-compliance either at build time, by failing the build, or at deploy time, by updating findings in AWS Security Hub. Given that scope, the benefits of designing these security measures into your pipelines from the beginning should quickly justify the additional effort required to implement them.